MCP Integration

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ User message ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━

'q': quit, Alt+Enter: newline

> calculate the relative performance increase from Claude 3.5 Sonnet to DeepSeek R1 in

https://krasserm.github.io/2025/02/05/deepseek-r1-agent/

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ Model response ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━

To calculate the relative performance increase from Claude 3.5 Sonnet to DeepSeek R1, I will:

1. Scrape the page for any benchmarking results (tables, figures, numbers) comparing both models.

2. Identify a benchmark or aggregate where both Claude 3.5 Sonnet and DeepSeek R1 are scored.

3. Calculate the relative increase:

(relative increase = (DeepSeek R1 score - Claude 3.5 Sonnet score) / Claude 3.5 Sonnet score)

Let me extract the relevant benchmarking data from the provided page first.

╭─ Code action ────────────────────────────────────────────────────────────────────────────────────────────────────────╮

│1 frommcpgen.firecrawl.firecrawl_scrapeimportfirecrawl_scrape,Params│

│2 │

│3 url='https://krasserm.github.io/2025/02/05/deepseek-r1-agent/'│

│4 params=Params(url=url,formats=["markdown"],onlyMainContent=True)│

│5 page_data=firecrawl_scrape(params)│

│6 page_data[:1200]# Show a snippet for analysis│

╰──────────────────────────────────────────────────────────────────────────────────────────────────────────────────────╯

Accumulated token usage: input=2.71k, thinking=0, output=223, cache_write=0, cache_read=2.69k; Accumulated costs: 0.003

USD

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ Execution result ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━

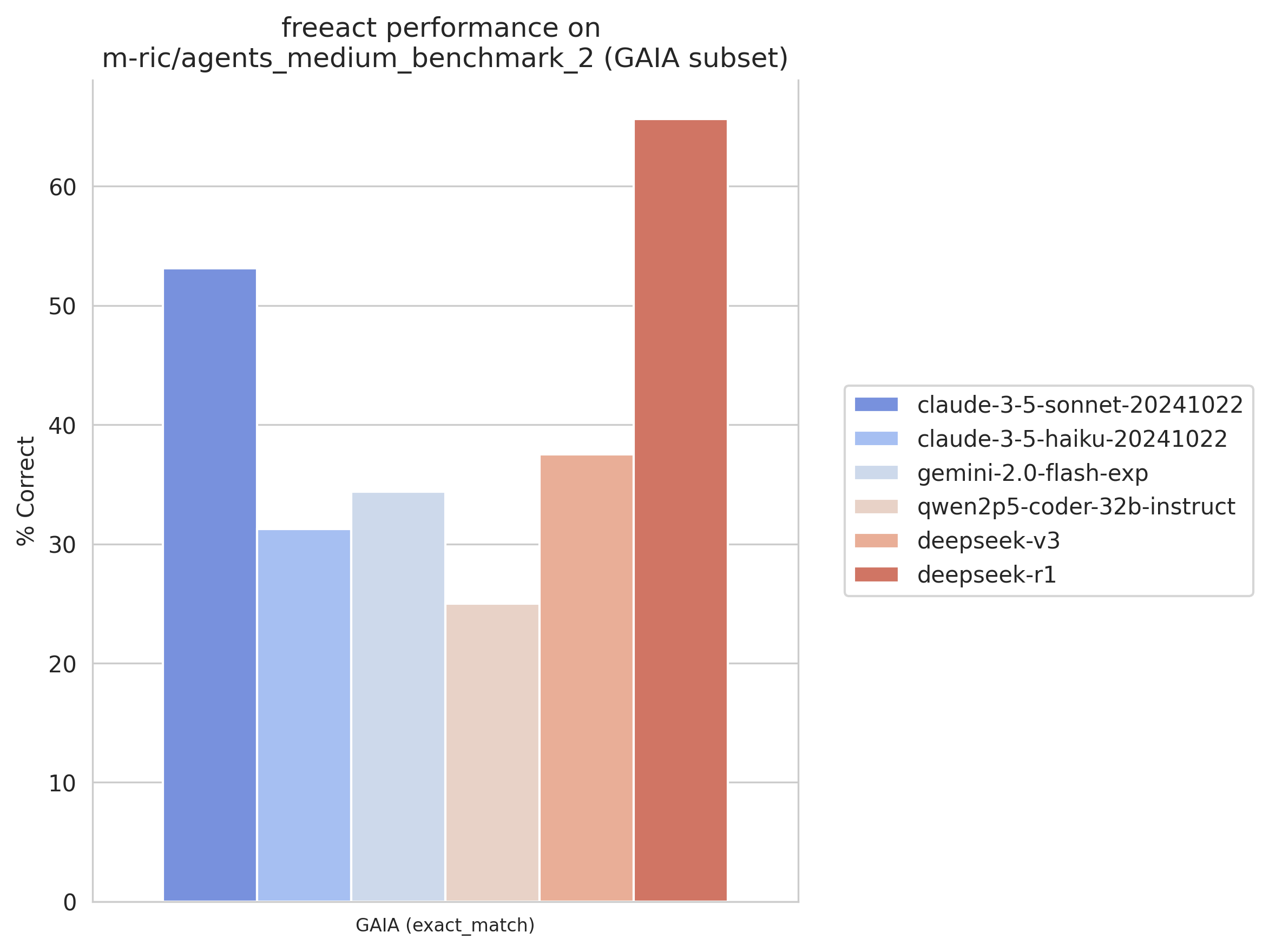

'I ran a quick [experiment](https://github.com/gradion-ai/freeact/blob/wip-legacy-pre-reasoning/evaluation/README.md)

investigating how DeepSeek-R1 performs on agentic tasks, despite not supporting tool use natively, and I was quite

impressed by preliminary results. This experiment runs DeepSeek-R1 in a single-agent setup, where the model not only

plans the actions but also formulates the actions as executable Python code. On a subset1 of the GAIA validation split,

DeepSeek-R1 outperforms Claude 3.5 Sonnet by 12.5% absolute, from 53.1% to 65.6% correct, and other models by an even

larger margin:\n\n\n\nThe experiment followed

model usage guidelines from the [DeepSeek-R1 paper](https://arxiv.org/abs/2501.12948) and the [model

card](https://huggingface.co/deepseek-ai/DeepSeek-R1#usage-recommendations): Don’t use few-shot examples, avoid adding a

system prompt, and set the temperature to 0.5 - 0.7 (0.6 was used). You can find further evaluation details

[here](https://github.com/gradion-ai/freeact/blob/wip-legacy-pre-reasoning/evaluation/README.md).\n\n##

Approach\n\nDeepSeek-R1’s strong coding capabilities enable it to act '

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ Model response ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━

From the webpage, we have the following benchmark results on a subset of the GAIA validation split:

- Claude 3.5 Sonnet: 53.1% correct

- DeepSeek R1: 65.6% correct

The page also states:

"DeepSeek-R1 outperforms Claude 3.5 Sonnet by 12.5% absolute, from 53.1% to 65.6% correct..."

Let me now calculate the relative performance increase:

Relative increase = (DeepSeek R1 - Claude 3.5 Sonnet) / Claude 3.5 Sonnet

= (65.6% - 53.1%) / 53.1%

I'll compute this to give you the precise percentage.

╭─ Code action ────────────────────────────────────────────────────────────────────────────────────────────────────────╮

│1 claude=53.1│

│2 r1=65.6│

│3 relative_increase=(r1-claude)/claude*100│

│4 relative_increase│

╰──────────────────────────────────────────────────────────────────────────────────────────────────────────────────────╯

Accumulated token usage: input=6.04k, thinking=0, output=433, cache_write=0, cache_read=5.38k; Accumulated costs: 0.007

USD

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ Execution result ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━

23.540489642184543

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ Model response ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━

The relative performance increase from Claude 3.5 Sonnet to DeepSeek R1 on the GAIA validation subset benchmark is

approximately 23.5%.

- Claude 3.5 Sonnet scored 53.1% correct

- DeepSeek R1 scored 65.6% correct

- Relative increase = ((65.6 - 53.1) / 53.1) × 100 ≈ 23.5%

This means DeepSeek R1 was about 23.5% better than Claude 3.5 Sonnet on this benchmark.

Accumulated token usage: input=9.68k, thinking=0, output=552, cache_write=0, cache_read=8.83k; Accumulated costs: 0.011

USD

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ User message ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━

'q': quit, Alt+Enter: newline

> q